Feature

Unlocking Industrial AI with Modern Data Ops

Akshata Agarwal, Robert Gallenberger

August 14, 2025

Category

Deep Dive


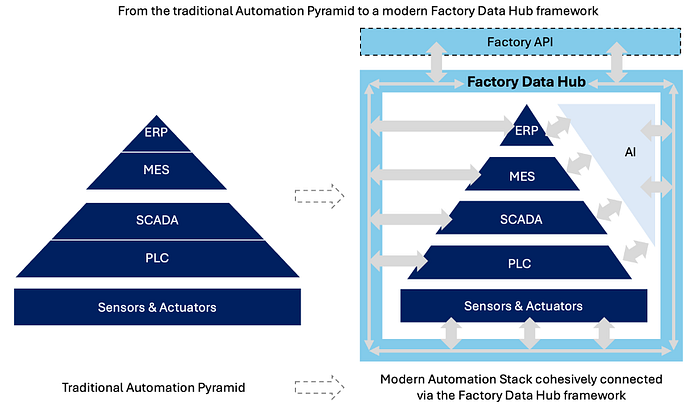

With the advent of Industry 4.0, and as we pave the way for production systems driven by agentic AI, the current state of the factory floor is being challenged. Manufacturers are embedding newer hardware, deploying an ever-increasing number of sensors, and aiming for adaptive collaboration between humans and robots, all in pursuit of the industrial gold that is efficiency. To support this, the OT/IT Automation Pyramid needs to transform from a rigid, local and hierarchical structure (Traditional Automation Pyramid) into a dynamic, interconnected Factory Data Hub framework that enables real-time, multilateral information flow (Modern Automation Stack), as visualized below.

In our version of the modern automation stack, the defining change is that the layers of the traditional stack are united by a Factory Data Hub: the underlying data infrastructure that connects all layers, eliminates data silos and brings agility into the tech stack. Written in collaboration with Matterwave Ventures, this thought piece focuses on the Factory Data Hub and what it’ll take to establish a performant and future-proof data infrastructure for smart factories.

Unlocking Industrial AI with Modern Data Ops

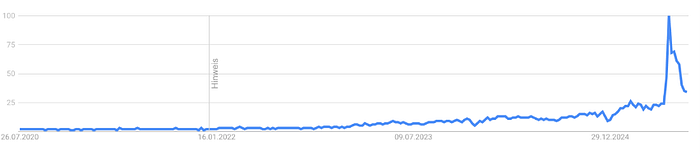

After last year’s surge of interest in Industrial AI, visible in the Google keyword search results below, it was only a matter of time before smart teams turned their focus to the industrial complex. And it has finally arrived: in recent months, Industrial AI and agents have become a hot topic in investor circles and funding rounds, fueling visions of smart factories run by intelligent systems.

But there seems to be a disconnect: most factories haven’t even completed the Industry 4.0 transition. Brownfield sites remain stuck in early-stage automation, and fewer than 15% of greenfield plants can be considered truly digitally transformed¹.

In a world in which AI might serve as the key to unlocking urgently needed productivity gains without causing downtime, OT/IT connectivity on the shop floor must evolve from a backend concern into a strategic priority. In this piece, we explore why connectivity is still the missing link, and what it needs to become to support the next wave of industrial intelligence.

New Buzz, Old Problem

Most factories still rely on equipment and systems that were never designed to work together. From legacy assets dating back to the 1980s to newer assets with embedded sensors and modern interfaces, factory floors are a complex patchwork of machines and systems built up over decades. To complicate matters further, the level of digitalization varies not just from company to company, but from site to site, and even more so across countries. But manufacturing is not just “horizontally” disconnected. Each system is designed around its own communication protocols and controls, collects and stores data in proprietary databases or historians, or runs on SCADA tools tailored to local setups and plant-specific use cases. There is no global standard, no universal interface, and no consistent way to access or move data between machines, systems, or locations³.

As a result, many manufacturers face the same core issue: their data is technically there, but practically unusable. It’s trapped in closed systems, scattered across tools that don’t speak the same language, and difficult to extract. This makes any large-scale AI or automation project difficult to start, let alone scale.

Data Infrastructure as one of the key opportunities in the Factory tech stack

How do we address this challenge and give our industries a real shot at becoming future-proof? Fortunately, this issue is no longer being overlooked: a growing ecosystem is actively working on diverse solutions. Before exploring how various players are tackling it, we first turn our attention to the evolving architecture of the ‘Factory Data Hub’: a foundational data stack that integrates seamlessly with the broader factory tech stack and is essential for launching and scaling AI-driven applications.

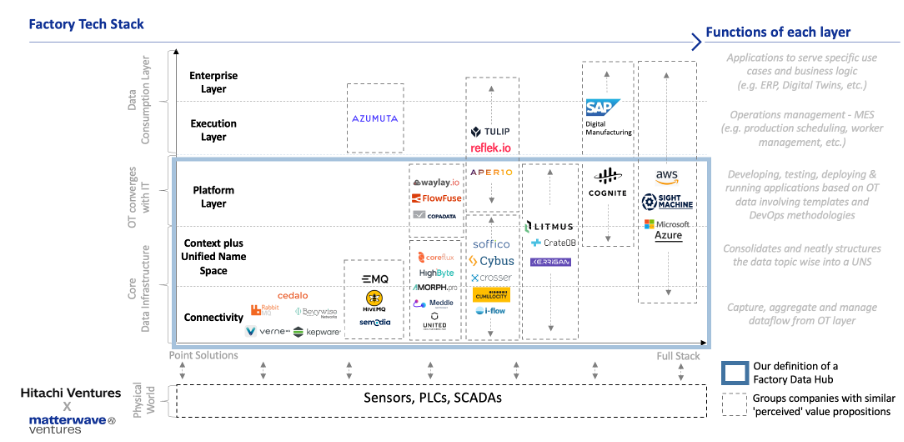

For context, in the software stack shown in the figure, each layer builds on the one below it as you move upwards. The part of the stack from the Connectivity Layer to the Platform Layer is what we call the ‘Factory Data Hub’, and it is the foundation for smart factories. As the shop floor undergoes increasing digitization through the adoption of new technologies, a well-defined interface with the cloud is becoming more essential. To manage the vast volume and variety of data generated on the shop floor, and to run AI-heavy workflows economically with low latency and real-time responsiveness, large and complex factories need a hybrid architecture that includes on-premise computation instead of transferring all data to the cloud. All this, along with the need to connect internal systems such as ERP, WMS and MES with OT systems from the shop floor, calls for a new ‘middle layer’ that we call the ‘Platform Layer’. It brings these functionalities together and provides an environment to develop, test, deploy and maintain applications with ease. While this is the ‘middle layer’, the core data infrastructure layer (Connectivity plus UNS) is where the real complexities get solved and where the tech moat sits.
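To make the hybrid argument a little more concrete, here is a minimal sketch of one possible on-premise step: condensing high-frequency sensor samples into per-window summaries before anything is forwarded to the cloud. The window length, the record fields and the function name `summarize_windows` are illustrative assumptions and do not describe any product mentioned in this piece.

```python
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, sensor value)


def summarize_windows(
    samples: Iterable[Sample], window_s: float = 60.0
) -> List[Dict[str, float]]:
    """Group samples into fixed time windows and keep min/mean/max per window.

    Hypothetical edge-side step: only these summaries would leave the plant.
    """
    buckets: Dict[int, List[float]] = {}
    for ts, value in samples:
        # Integer index of the window this timestamp falls into.
        buckets.setdefault(int(ts // window_s), []).append(value)
    return [
        {
            "window_start": idx * window_s,
            "count": len(vals),
            "min": min(vals),
            "mean": mean(vals),
            "max": max(vals),
        }
        for idx, vals in sorted(buckets.items())
    ]


# Two minutes of synthetic 1 Hz readings (made-up values for illustration).
raw = [(float(t), 20.0 + (t % 10)) for t in range(120)]
summaries = summarize_windows(raw)
print(len(raw), "raw samples ->", len(summaries), "summary records")
```

In this toy case 120 raw readings shrink to two records. Latency-critical logic could keep consuming the raw stream locally, while the cloud side would only see the condensed view.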

We’ll break down each of these layers and explore how the landscape is changing as the worlds of factory equipment (OT) and business technology (IT) become more closely connected. The following illustration shows how data moves from the shop floor up to business decision-making layers.

The Core Data Infrastructure Layer forms the backbone of the tech stack and can be seen as two distinct layers:

a. Connectivity Layer: This layer interfaces directly with the shop floor. It receives data via communication protocols from sensors, PLCs and SCADA systems and distributes it to any application that has subscribed to that data (called ‘brokering’). While this makes it the ‘data aggregation’ layer in a way, the data arrives over multiple protocols and in different formats. It is therefore heterogeneous and not directly digestible by the layers higher up the stack, which creates data silos that don’t interact with each other. Hence a further layer is needed to make this data readable.

b. Context plus Unified Name Space (UNS) Layer: While the ‘broker’ manages the real-time data flow, it passes that data on to this layer, which consolidates and neatly structures the data for each topic in a single, standardized and hierarchically organized space. This makes building APIs and connecting them to other systems easy. The Unified Name Space is meant to be a contextualized single source of truth for all OT data and acts as the backbone infrastructure on which the IT systems function.
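The division of labor between these two layers can be illustrated with a small, self-contained sketch. It is not any vendor’s implementation. The topic hierarchy (enterprise/site/area/line/asset/metric), the MQTT-style `+` and `#` wildcards, the payload formats, the register scaling, and all names such as `UnsBroker`, `uns_topic` and `normalize` are assumptions chosen purely for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Assumed UNS hierarchy for this sketch (not taken from the article).
LEVELS = ("enterprise", "site", "area", "line", "asset", "metric")


def uns_topic(**parts: str) -> str:
    """Build a hierarchical topic path from the named levels."""
    missing = [lvl for lvl in LEVELS if lvl not in parts]
    if missing:
        raise ValueError(f"missing levels: {missing}")
    return "/".join(parts[lvl] for lvl in LEVELS)


def topic_matches(pattern: str, topic: str) -> bool:
    """MQTT-style matching: '+' matches one level, '#' matches the remainder."""
    p_parts, t_parts = pattern.split("/"), topic.split("/")
    for i, p in enumerate(p_parts):
        if p == "#":
            return True
        if i >= len(t_parts) or (p != "+" and p != t_parts[i]):
            return False
    return len(p_parts) == len(t_parts)


def normalize(source: str, raw: dict) -> dict:
    """Context step: map protocol-specific payloads onto one shared schema."""
    if source == "opcua":
        return {"value": float(raw["Value"]), "unit": "degC", "source": source}
    if source == "modbus":
        # Assumed scaling: the register holds tenths of a degree Celsius.
        return {"value": raw["register"] / 10.0, "unit": "degC", "source": source}
    raise ValueError(f"unknown source: {source}")


class UnsBroker:
    """Toy in-memory broker that also keeps the last value per topic."""

    def __init__(self) -> None:
        self._subs: List[Tuple[str, Callable[[str, dict], None]]] = []
        self.latest: Dict[str, dict] = {}

    def subscribe(self, pattern: str, handler: Callable[[str, dict], None]) -> None:
        self._subs.append((pattern, handler))

    def publish(self, topic: str, payload: dict) -> None:
        self.latest[topic] = payload
        for pattern, handler in self._subs:
            if topic_matches(pattern, topic):
                handler(topic, payload)


broker = UnsBroker()
received: List[Tuple[str, dict]] = []
# One subscription covers every temperature reading at the (made-up) site.
broker.subscribe("acme/munich/+/+/+/temperature", lambda t, p: received.append((t, p)))

press = uns_topic(enterprise="acme", site="munich", area="press_shop",
                  line="line1", asset="press_07", metric="temperature")
oven = uns_topic(enterprise="acme", site="munich", area="paint_shop",
                 line="line3", asset="oven_02", metric="temperature")
broker.publish(press, normalize("opcua", {"Value": 71.3}))
broker.publish(oven, normalize("modbus", {"register": 1845}))
print(received)
```

The point of the sketch is that a consuming application never needs to know which protocol a machine speaks: it subscribes to a position in the hierarchy and receives values in one schema.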

Platform Layer:

This is the middle layer that enables data orchestration and integration between OT and IT, helping bridge the gap between data flow integration and workflow processes. Companies in this layer act like a DevOps platform for manufacturers, giving them the toolkit to build specific solutions on top of the data infrastructure. They provide a ‘toolbox’ of ready-to-use APIs that connect the OT, peer-to-peer data across plants and multiple internal systems, and they provide the infrastructure to enable hybrid operations (marrying on-premise with cloud), all via one platform on top of which end-user and enterprise applications can then be built easily. The pull for this layer is that it is a one-stop shop for the client, which allows for easier vendor management and faster deployment of multiple use cases across the organization and its plant locations.

Execution and Enterprise Layer:

Together, these comprise the IT stack. They are built on top of the Platform Layer to provide the actual analytics and applications that serve use cases. Once the Platform Layer has enabled a one-stop shop and seamless connectivity to the OT, the Execution Layer schedules and executes production orders via a Manufacturing Execution System (MES), and on the Enterprise Layer this production data can be linked to business systems such as ERP.

In our landscape, we have used the ‘core value proposition’, as perceived by us, to position companies along a spectrum from point solutions to more full-stack solutions on the x-axis, and we have aligned them along the factory tech stack value chain on the y-axis. We see this dimension of core value proposition as one of the key drivers for the industry. While this is our key criterion, there are of course many other dimensions that can be used to slice and dice the landscape, for example the ICP focus (from mid-market to large enterprise), high performance for extreme data loads vs. “normal” environments, a focus on “IoT devices in the field” vs. machines on the factory floor, or discrete manufacturing vs. process industries.

As we were building this landscape, we faced three core challenges: unravelling the inherent complexities of a highly interconnected data infrastructure for a factory floor; defining the scope of each layer so that it did not overlap with the previous one yet still captured the intricacies of that layer; and reconciling that with the exact solutions companies offer, given the sometimes blurry language on company websites and in pitch decks. Defining the value propositions was more nuanced than expected. Companies seem to offer a variety of overlapping but not identical feature sets, especially as you go up the stack towards the platform, which makes it harder to place them clearly in specific categories. In addition, companies seem to be adding to their product suites as they inch towards becoming more full-stack. This trend of moving up the stack, and the hypothesis behind it, is something we cover in our discussion below.

Companies positioned further to the right on the x-axis, such as SAP, Cognite, and Microsoft, are generally recognized as more established, full-stack solution providers. They often achieve this status through strategic partnerships with players lower in the technology stack. Moving leftward along the axis, the companies become increasingly specialized in specific layers of the stack, with some gradually expanding toward full-stack capabilities.

As can be seen in the chart, companies in this space are taking different approaches. Players like HiveMQ and Semodia focus on brokering data at the connectivity layer, while players like US-based Litmus, having started from connectivity, have moved upwards to provide full-stack solutions. Now positioned as an Industrial DataOps platform that connects OT to IT, Litmus also provides the foundation for tech giants like Google to link cloud services with manufacturing plants. Germany-based Cybus, a Matterwave portfolio company, positions its software “Connectware” as a vendor-agnostic factory data layer, enabling a more strategic approach to digitalization. The solution covers all aspects of DataOps and IT/OT integration, prioritizing technological independence and enabling composable, individual technology stacks. Leading manufacturers such as Porsche have built their data foundation with Connectware, integrating cloud and shop floor in production-critical, highly available setups across multiple sites. While these players have taken the bottom-up approach, players like Reflek.io partner with the likes of Litmus for connectivity in order to provide platform solutions to manufacturers, and have expanded upwards towards the enterprise layer, also providing end use cases like a Digital Twin. In contrast, players like Cognite position themselves as ‘built for purpose’ platforms for the industry and focus on doing just that.

This layered, and to some extent fragmented, offering of companies in the space follows the logic of specialization in an early market that faces a broad range of performance requirements and other customer expectations. It makes a lot of sense for individual companies to focus their resources on delivering their piece of the puzzle in the best possible quality. In addition, customers in the manufacturing world not only have very high quality and performance standards, they have also had their share of experience with closed “full-stack” systems in which automation hardware companies tried to keep them locked for decades. A modular approach of specialized solutions without overly strong lock-in effects across the stack is the obvious conclusion from the initial problem statement of the traditional automation pyramid.

Nonetheless, we believe there is significant merit in individual software companies moving higher up the technology stack while still offering standardized interfaces and openness. As companies ascend the stack, they move closer to the end use case, making it easier to demonstrate tangible return on investment (RoI) based on specific use cases. This proximity to applications is a key attraction for companies operating at or moving towards the platform layer, where clear implementation pathways and direct alignment with customer pain points make budget access more straightforward. In contrast, while the core data infrastructure remains critical and foundational, companies in this layer often face go-to-market challenges. Budget ownership is typically fragmented between Operational Technology (OT) and Information Technology (IT) teams, with little coordination or shared accountability. In our conversations with founders, a recurring theme is this organizational disconnect. IT is typically organized as a company-wide central support function, while OT is defined as a plant-level or factory-specific role. Addressing those two very different functions with one product offering can surface misalignment and friction, slow decision-making, and often decouple purchasing criteria from the actual merits of the solution. The need to get buy-in from both sides adds inertia and tends to delay the adoption of otherwise high-value solutions. Ultimately, we see this partial disconnect between business value and buying and implementation dynamics as the main challenge to unlocking the potential of companies in this space. It’s a call to action for decision makers to reconsider responsibilities, budget allocation and investment rationales so as not to miss the bus on factory digitization and AI-driven operations.

In summary, while platform companies like Reflek.io or Cognite benefit from their strategic positioning to also access use-case-specific OT budgets, we think that players who own the ‘lowest level of connectivity’, such as Litmus and Cybus among others, and who move up the chain may have a long-term advantage. They enjoy the highest barrier to entry as well as more attractive economics, both by virtue of their stickiness at the client’s site and their ability to access the budgets of both the OT and IT organizations.

The landscape as represented above is complex, with companies typically operating at multiple layers. There isn’t really a true ‘one-stop shop’ solution in the industry today, which leads to more partnership-based working models. In addition, it may be harder for companies operating at higher levels (enterprise layer) to move downward toward core connectivity. We have observed hyperscalers like Microsoft Azure trying this strategy of ‘owning the stack’, using the Azure IoT Suite to offer a ‘preconfigured connected factory’ solution with integrations all the way down to the connectivity layer, and over the years moving back and forth on connecting directly to OT systems. At the same time, as a cloud operator, they partner very actively with players across the stack. Arguably, all of this only makes those controlling the foundational connectivity layer attractive strategic acquisition targets for players further up the value chain, either for data or for capability. Nonetheless, we do think more integrated solutions have an edge, and there are successful analogs of such players in adjacent markets that have created significant value, for example Mulesoft’s $6.5 billion acquisition by Salesforce in 2018. Public and private market analogs such as Workato⁴ ($5.7 billion) or Confluent⁵ ($5.5 billion) demonstrate investor appetite for similar infrastructure plays. Yet this gap is still to be filled in a sector generating $14 trillion in product value annually. In the long term, as the vision of fully virtualized PLCs takes shape and the need to integrate protocols like MCP for agentic AI becomes more urgent, the lack of this foundational infrastructure will be felt more acutely. Without it, Europe risks falling behind in the wave of re-industrialization, both technologically and economically.

Key Takeaways

1. We observe a gap in focused solutions at the platform layer: a lack of DevOps solutions that allow teams to develop, test, deploy and maintain applications while being sufficiently focused on factory environments and use cases. How will this gap be filled? Will foundational connectivity players rise up the stack, will purpose-built automation platforms seize the opportunity, or will users just make use of existing and much more generic automation and data integration platforms?

2. Moving up the stack is arguably easier than moving down, as demonstrated by hyperscalers like Microsoft via their partnership strategy, among others, and by acquisitions of downstream players such as Mulesoft by the likes of Salesforce as an analog from another market. As things heat up in the quest for Industrial AI, could we expect similar dynamics, especially given the fragmented nature of the landscape?

3. Solutions owning the core infrastructure layer and moving up the stack may hold an unfair long-term advantage, benefiting from high client stickiness, control over foundational data flows, and access to both OT and IT budgets.

4. The organizational disconnect between OT and IT departments may be seen as the major barrier for full-scale adoption of the proposed factory data hub architecture.

Let’s connect if you are building in the space!

Disclaimer: This analysis reflects our current understanding and a fair number of hypotheses. We certainly don’t claim to have found “the truth” and very much welcome feedback, additional insights, or corrections to our statements. We encourage open dialogue and look forward to a constructive discussion on these topics.

About Matterwave Ventures

Matterwave Ventures is a leading European Venture Capital firm investing in early-stage hardware and software companies, leading the transformation of the European industrial sector towards a more sustainable, sovereign, and digitalized future. By providing capital and support to the continent’s most ambitious founder teams and most relevant technology assets, Matterwave aims to help build category winners that form the backbone of a thriving European industrial ecosystem. The team’s vision is to regain more technology leadership, production capacity as well as energy and raw material autonomy in Europe. Over the last 25 years, the team invested in over 70 companies at the intersection of ClimateTech, DeepTech and IndustrialTech. Matterwave invests “full stack”, from materials and components to complete systems and software solutions. With over EUR 250 million of capital under management, Matterwave typically participates in Seed and Series A financing rounds across Europe with initial investments between EUR 1–4 million and significant reserves for follow-on financing rounds. https://matterwave.vc

Co-Authors: Akshata Agarwal & Robert Gallenberger

About Hitachi Ventures

Hitachi Ventures is the corporate venture capital arm of Hitachi Ltd. With $1.0 billion in assets under management, it focuses on investing in early-stage and growth-stage technology companies with strategic relevance to Hitachi. With a global network and extensive experience across various industries, Hitachi Ventures supports innovative startups in their journey to disrupt markets and transform industries. The firm’s investment areas span environmental tech & energy, artificial intelligence, digital technologies, industrial automation and life sciences. Hitachi Ventures typically invests in Seed to Series B financing rounds globally, with initial investment sizes between $2–7 million. https://www.hitachi-ventures.com/

Co-Authors: Marina Du & Jan Marchewski

Sources

3 OPC UA comes closest to serving as such a universal standard for machine-to-machine communication, though it faces multiple practical limitations

4 Workato storms to a $5.7B valuation after raising $200M for its enterprise automation platform

5 Represents market capitalization as of 08/11/2025 close